AI Developers Are Left in the Dark: Google's Controversial Move with Gemini

Photo by Solen Feyissa on Unsplash

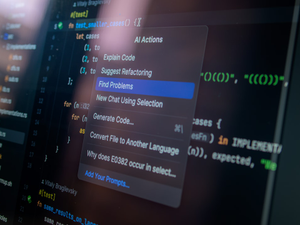

Tech giants are once again stirring controversy in the AI world, and this time, Google is at the center of the storm. The company recently decided to hide the raw reasoning tokens of its Gemini 2.5 Pro model, leaving developers frustrated and struggling to debug their applications.

Developers rely on these “Chain of Thought” (CoT) tokens to understand how AI models arrive at their conclusions. By removing this transparency, Google has effectively created a black box that makes troubleshooting far harder. One developer on Google’s AI forum described being forced to “guess” why a model failed, leading to “incredibly frustrating, repetitive loops trying to fix things.”

The implications of this change extend far beyond simple debugging. Enterprises and developers use these reasoning chains to fine-tune prompts, create sophisticated AI workflows, and ensure the reliability of mission-critical systems. Without visibility into the model’s thought process, companies are left with less control and more uncertainty.

Google claims the change is “purely cosmetic” and aimed at creating a cleaner user experience. Senior product manager Logan Kilpatrick suggested that very few people actually read the detailed reasoning traces. However, the developer community sees this as a significant step backward in AI transparency.

Interestingly, some AI experts, such as Subbarao Kambhampati of Arizona State University, suggest that these reasoning tokens might not be as valuable as developers believe. Kambhampati’s research indicates that the intermediate steps an AI model generates don’t necessarily provide meaningful insight into its actual problem-solving process.

The move may also serve a strategic purpose. By hiding raw reasoning traces, Google makes it harder for competitors to perform “model distillation,” a technique in which a smaller model is trained on the outputs of a more powerful one in order to mimic it.

As AI continues to evolve, the tension between user experience, model performance, and transparency will only grow. For now, developers are left wondering whether they’ll regain the debugging tools they’ve come to rely on or whether this marks a new, more opaque era of AI development.

One thing is clear: the AI community is watching closely, and Google’s decision could have far-reaching implications for how we understand and interact with artificial intelligence.

AUTHOR: tgc

SOURCE: VentureBeat