AI Fails Big Time During Hawaii Tsunami Alert: When Tech Goes Wrong

Photo by DavidErickson

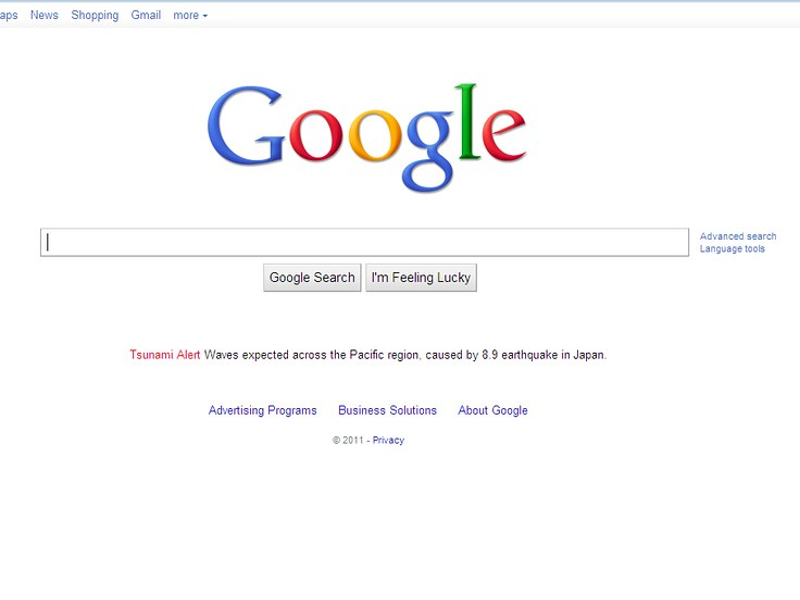

In a stark reminder of the limitations of artificial intelligence, a massive earthquake off Russia’s Pacific coast exposed serious flaws in AI systems. When the magnitude 8.8 earthquake triggered tsunami warnings across the Pacific, residents of Hawaii, Japan, and North America’s West Coast turned to digital platforms for critical safety information.

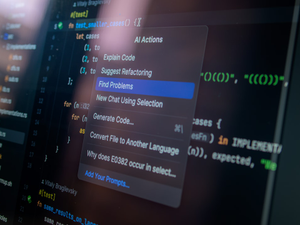

But instead of reliable guidance, users encountered potentially dangerous misinformation from AI tools, including Grok, the chatbot developed by Elon Musk’s xAI, and Google’s AI search overviews. Grok repeatedly claimed that Hawaii’s tsunami warning had been canceled while it was still active, citing sources incorrectly and potentially putting lives at risk.

The incident highlights growing concerns about the reliability of AI tools in emergency situations. While the tsunami ultimately subsided without major damage, the episode exposed significant vulnerabilities in how artificial intelligence processes and disseminates real-time information.

Users on social media platforms quickly called out these missteps. One user bluntly described AI as a “disaster for real-time events,” while others shared screenshots of the chatbot’s inaccurate claims. Some tagged Elon Musk directly, criticizing Grok for spreading “life-endangering misinformation.”

Grok’s response to the criticism was minimal, with the chatbot simply stating, “We’ll improve accuracy.” Google, meanwhile, said that its AI overviews are designed to provide information from reliable sources, especially for high-stakes queries.

This incident serves as a critical wake-up call for tech companies developing AI technologies. While these tools promise convenience and instant information, they must be held to rigorous standards, particularly when public safety is at stake. The tsunami alert failure underscores the importance of human oversight and the potential dangers of blindly trusting artificial intelligence.

As AI continues to integrate into our daily lives, incidents like this one remind us that the technology is still fallible. Critical thinking and verification remain essential, especially during emergencies, when accurate information can mean the difference between safety and harm.

AUTHOR: cgp

SOURCE: SF Gate