Tech Researcher Hacks OpenAI's AI Model to Unlock Unfiltered Language Generation

Photo by Andrew Neel on Unsplash

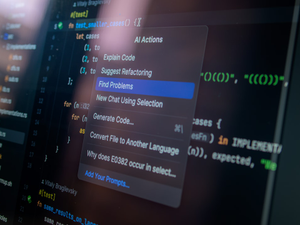

Jack Morris, a Cornell Tech PhD student, has drawn wide attention in the AI research community by converting OpenAI’s open-weight GPT-OSS-20B model into a less restricted version he calls gpt-oss-20b-base.

Morris’s experiment strips away the carefully constructed “reasoning” behaviors that typically make the model’s responses polite, safe, and structured, and exposes what lies underneath. He applied low-rank adaptation (LoRA), a fine-tuning technique that trains small low-rank weight updates while leaving the original weights frozen, to just three layers of the model. The result is effectively a return to a rawer, uncensored state.
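To make low-rank adaptation concrete, here is a minimal pure-Python sketch of a single LoRA-style layer. It assumes the standard formulation, in which a frozen weight matrix W is augmented by a scaled product B·A of two small trainable matrices. The class name `LoRALinear`, the `alpha` scaling, and the toy dimensions are illustrative assumptions. This is not Morris’s code, which the article does not show, and it covers one layer rather than the three he adapted.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Hypothetical toy LoRA layer: frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weights; training never updates these
        # A (rank x d_in) gets small random values; B (d_out x rank) starts at zero,
        # so the adapted layer initially computes exactly the same outputs as W.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank  # common LoRA scaling of the update by alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)               # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x), never forming B @ A
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        # rank * d_in entries in A plus d_out * rank entries in B
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear([[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]], rank=1)
print(layer.forward([1.0, 1.0, 1.0]))  # [3.0, 0.0]: identical to W x while B is zero
print(layer.trainable_params())        # 5 = 1 * 3 + 2 * 1
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer exactly, and training only has to learn a small correction. The trainable count is rank × (d_in + d_out) per adapted matrix, which is why LoRA touches so few of a model’s parameters.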

The resulting model generates text without the usual guardrails. It can produce content that OpenAI’s released model would refuse, such as instructions for illegal activities or verbatim passages of copyrighted material. The result is more than a technical stunt: it offers a look at how language models store knowledge and how alignment shapes what they generate.

What sets Morris’s work apart is its method. Rather than trying to “jailbreak” the model with clever prompts, he treated the problem as an optimization task. He trained only about 60 million parameters (roughly 0.3 percent of the model’s 21 billion total) on about 20,000 documents, and that was enough to nudge the model back toward its original, less constrained state from before alignment.
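The “optimization problem” framing can be illustrated with a deliberately tiny toy: a single frozen scalar weight plus a rank-1 adapter (two scalars, `a` and `b`), trained by gradient descent while the frozen weight stays fixed. This is a hypothetical sketch. It uses a squared-error loss on made-up data in place of the next-token prediction objective a language model would actually be trained with, and `train_adapter` and its defaults are assumptions, not Morris’s setup.

```python
def train_adapter(w_frozen, xs, ys, lr=0.05, steps=2000):
    """Hypothetical toy: fit a rank-1 adapter (a, b) on top of a frozen scalar weight.

    The model is y_hat = (w_frozen + b * a) * x. Gradient descent updates only a and b
    to minimize mean squared error; w_frozen is never modified.
    """
    a, b = 1.0, 0.0  # b starts at zero, so training begins from the frozen model's behavior
    n = len(xs)
    for _ in range(steps):
        w_eff = w_frozen + b * a
        # dLoss/dw_eff for loss = mean((w_eff * x - y) ** 2)
        g = sum(2.0 * (w_eff * x - y) * x for x, y in zip(xs, ys)) / n
        # chain rule: dLoss/da = g * b and dLoss/db = g * a (simultaneous update)
        a, b = a - lr * g * b, b - lr * g * a
    w_eff = w_frozen + b * a
    loss = sum((w_eff * x - y) ** 2 for x, y in zip(xs, ys)) / n
    return a, b, loss


# Made-up data generated by a "target" weight of 2.0; the frozen weight is 1.0.
a, b, loss = train_adapter(1.0, [1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(f"effective weight {1.0 + a * b:.4f}, loss {loss:.2e}")
```

Only `a` and `b` change. The frozen weight of 1.0 is untouched, yet the effective weight `1.0 + a * b` ends up at the target value of 2.0.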

Morris is clear about the limitations of his work, emphasizing that he recovered the model’s output distribution, not its exact original weights. This transparency is crucial in a field where technical claims are often sensationalized.
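The distinction between an output distribution and exact weights matters because matching a model’s behavior on the data you can observe does not pin down its parameters. The toy below, with hypothetical matrices and inputs, shows two different weight matrices that produce identical outputs on every observed input.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Two hypothetical weight matrices that differ only in their second column.
W_original = [[2.0, 0.0], [1.0, 3.0]]
W_recovered = [[2.0, 7.0], [1.0, -4.0]]

# Hypothetical "observed data": every input has a zero second coordinate,
# so the second column of either matrix never affects the output.
observed_inputs = [[1.0, 0.0], [-2.0, 0.0], [0.5, 0.0]]

same_outputs = all(matvec(W_original, x) == matvec(W_recovered, x) for x in observed_inputs)
same_weights = W_original == W_recovered
print(same_outputs, same_weights)  # True False
```

Any difference confined to directions the data never exercises is invisible from outputs alone. Real networks have many more such symmetries, so reproducing a model’s behavior is not the same as recovering its weights.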

The research has implications beyond this one model. It gives researchers a way to study how alignment changes a model’s behavior, what knowledge the model retains underneath, and how easily that behavior can be altered. For the tech community, it is a reminder of the complex, layered nature of modern artificial intelligence systems.

As AI continues to evolve, experiments like Morris’s will be critical for understanding the mechanisms behind these increasingly sophisticated language models.

AUTHOR: kg

SOURCE: VentureBeat