AI Chatbots Are Causing Chaos in Bay Area Law Firms

Photo by Igor Omilaev on Unsplash

The legal world in the San Francisco Bay Area is facing a technological reckoning as lawyers adopt artificial intelligence chatbots that can produce convincing but false information. A recent incident involving a veteran Palo Alto lawyer highlights the risks of relying on AI-generated material in professional work.

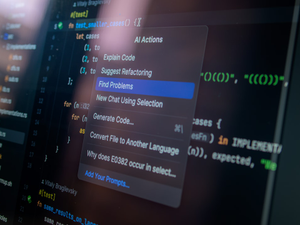

Palo Alto lawyer Jack Russo was embarrassed after he submitted court documents containing fabricated case citations, which he later admitted had been generated by an AI chatbot. Such incidents are becoming increasingly common, and lawyers across the United States are facing serious professional consequences for using AI-generated content without checking it.

According to recent American Bar Association surveys, AI use in law firms nearly tripled, from 11% in 2023 to 30% in 2024, with ChatGPT the most widely adopted tool across firms of all sizes. That rapid adoption, however, comes with significant risks.

AI “hallucinations,” instances in which a chatbot presents fabricated information as fact, pose a serious problem. These errors can lead to financial sanctions, disciplinary action, and malpractice lawsuits. Some judges have already imposed fines of up to $31,000 and have begun referring lawyers to disciplinary authorities.

Santa Clara University law professor Eric Goldman warns that the consequences extend beyond fines. Judges might dismiss critical filings or reject entire cases, and a lawyer’s credibility with the court can be badly damaged. The stakes are especially high in cases involving life-changing decisions such as child custody or disability claims.

Despite these risks, experts like Goldman acknowledge AI’s potential benefits when used responsibly. The technology can help lawyers discover information they might otherwise miss and streamline document preparation. The key lies in maintaining rigorous verification processes and understanding AI’s limitations.

As the legal technology landscape continues to evolve, Bay Area law firms must develop robust protocols to prevent AI-generated misinformation from compromising their professional standards and client trust.

AUTHOR: pw

SOURCE: The Mercury News