ChatGPT's Privacy Blunder: When AI Sharing Goes Wrong

Photo by Solen Feyissa on Unsplash

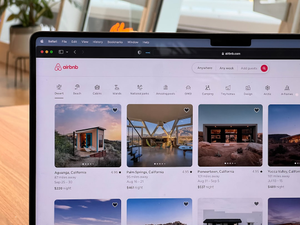

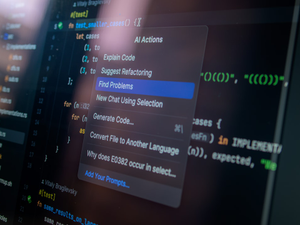

In a move that sent ripples through the tech world, OpenAI abruptly removed a feature that allowed ChatGPT conversations to be discoverable through search engines after thousands of private chats were accidentally exposed online.

The experimental feature, which required users to opt in when sharing their conversations, quickly backfired when people realized their intimate and often sensitive interactions were now searchable on Google. From personal health queries to professional resume rewrites, the unintended exposure highlighted the fragile boundaries of AI privacy.

OpenAI’s security team acknowledged the misstep, stating that the feature “introduced too many opportunities for folks to accidentally share things they didn’t intend to.” The incident reveals a critical challenge facing AI companies: balancing innovative features with robust privacy protections.

This isn’t an isolated incident. Other tech giants like Google and Meta have faced similar privacy fumbles with their AI platforms, suggesting a broader industry-wide issue. The rapid spread of the story across social media platforms demonstrated how quickly privacy breaches can escalate in the digital age.

For businesses and individual users, the episode serves as a stark reminder to carefully examine the privacy settings and potential risks of AI tools. The incident underscores the need for more thoughtful design of user interfaces and privacy controls.

As AI becomes more deeply integrated into our personal and professional lives, these privacy challenges become increasingly critical. Companies must prioritize user trust and implement more sophisticated safeguards to prevent unintended data exposure.

Ultimately, the ChatGPT privacy incident is a wake-up call for the AI industry: innovation must never come at the expense of user privacy and security.

AUTHOR: kg

SOURCE: VentureBeat