AI's Copycat Problem: When Machines Start Learning From Each Other

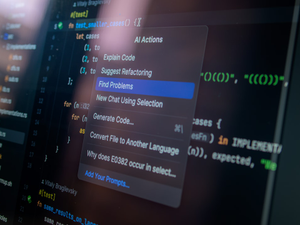

Photo by Cash Macanaya on Unsplash

In recent months, a growing number of developers and everyday users have expressed frustration with the latest generation of large language models. Despite promises of smarter, safer, and more capable systems, many say the outputs feel increasingly uniform: polished, predictable, and less creative than before. This trend isn’t just anecdotal. It may be a symptom of a deeper issue in the AI industry: a growing copycat problem. As leading companies race to match one another’s breakthroughs, the ecosystem risks collapsing into a self-referential loop, where models learn from each other instead of the world.

The competition among AI leaders has never been more intense. OpenAI, Anthropic, Google, DeepSeek, and others are locked in an arms race over performance, cost, and safety. Each major release triggers a wave of imitation: a new feature, safety policy, or model design that others quickly emulate. But the similarity isn’t limited to business strategy. The models themselves may also be converging. Recent reports surrounding DeepSeek, the Chinese AI company that made international headlines earlier this year, suggest it trained on content derived from OpenAI’s ChatGPT models, raising further ethical and legal questions about how data is sourced in the global AI ecosystem.

Every large model today learns by consuming the internet: the sum of our posts, articles, images, and code. The concern is simple but profound: if AI-generated text, code, and art continue to flood the internet, and future models train on that same data, the next generation of models will increasingly learn from other AIs, not humans. The result is a self-reinforcing feedback loop that researchers warn can end in model collapse. It’s like a photocopy of a photocopy: crisp edges at first, but each generation gets blurrier. And it’s not just the data. The ideas, tone, and user experiences are flattening too. Ask five different chatbots the same question today, and you’ll likely get five variations of the same polished, polite, over-explained paragraph.

While this issue feels novel, history offers perspective. In the mid-2010s, Silicon Valley experienced a similar wave of optimism around conversational technology. The chatbot boom of 2015–2016 promised to redefine digital interaction. Platforms like Facebook Messenger, Slack, and Telegram opened their ecosystems to bot developers. Every startup suddenly claimed they were “redefining conversation”. VC money poured in left and right. Everyone wanted a bot for customer service, for pizza orders, for therapy. Even for love. But when the dust settled, the “next big thing” turned out to be… well, not so big. The bots were underwhelming. They couldn’t hold context, misunderstood basic intent, and often frustrated users more than they helped. It was a classic case of hype sprinting miles ahead of capability. Even the big players couldn’t escape the crash. Facebook’s much-hyped M assistant was quietly shut down. Microsoft’s Tay bot infamously turned racist within 24 hours. And thousands of smaller “AI chatbot startups” folded as quickly as they’d appeared. The pattern was clear: when the experience doesn’t match the expectation, users tune out. Fast.

What’s different now is that AI finally works. At least sort of. Today’s models are orders of magnitude smarter, more flexible, and can generate code, art, and essays in seconds. But some of the same warning signs are flickering again: overpromising, underdelivering, and chasing hype cycles instead of real progress. As David Pichsenmeister, an AI industry veteran who has been through this hype cycle before, observes: “The chatbot boom didn’t fail because the idea was bad. It failed because the experience fell short. We’re in a similar moment now. The technology is more advanced, but expectations are even higher”.

In the rush to deploy increasingly capable models, AI companies face a difficult trade-off between safety, originality, and performance. OpenAI and Anthropic have taken a strong stance on alignment and trust, emphasizing models that are less likely to produce harmful or inaccurate content. That focus is understandable, especially given past controversies over bias and misinformation. But if models keep getting safer yet less interesting, and competitors keep copying features instead of innovating, the industry risks entering its Chatbot 2.0 era: shiny on the outside, hollow on the inside.

That doesn’t mean AI needs to abandon safety. It needs to balance it with intelligence, adaptability, and critical reasoning. The real danger isn’t a rogue model saying something offensive. It’s a future where every AI says the same thing, in the same tone, without thinking critically about context. If these models keep learning from themselves, they risk spiraling into data inbreeding, a creative collapse where originality fades and bias compounds. Without strong oversight, transparency, and innovation in how training data is sourced, AI may end up learning from the worst teacher possible: its own recycled output. The challenge is to make AI smarter and safer simultaneously. We need models that can reason deeply without repeating errors, and generate novel ideas without compromising integrity. Achieving that balance requires more than better algorithms. It demands careful oversight of how models are trained, evaluated, and deployed.

The DeepSeek controversy underscores a growing risk of data contamination across AI ecosystems. If developers increasingly rely on synthetic data (or on datasets already influenced by AI outputs) the industry could face a long-term degradation in quality. This isn’t just a technical issue. It’s a question of sustainability. Without high-quality, human-generated data, future models could lose the richness and unpredictability that make them valuable. The lesson from the chatbot era remains relevant: technological revolutions often fail not because the ideas are wrong, but because the execution and user experience fall short. The AI industry now stands at a similar crossroads, one where the pursuit of growth and imitation could come at the cost of originality and trust.

AI has evolved from a tool that imitates human intelligence to one that risks imitating itself. The next phase of innovation will depend on breaking that loop: diversifying training data, emphasizing transparency, and rewarding models that think rather than repeat. As David Pichsenmeister puts it: “We wanted AI to learn from humanity, not from a reflection of its own reflection. If we lose the human element, what exactly are we teaching it?” If the industry can strike the right balance between safety, creativity, and authenticity, it can avoid the stagnation that doomed past waves of hype. If not, the future of AI may look less like a revolution and more like an echo chamber.

AUTHOR: mei